Catch up on ChatGPT in five minutes

You may have already tested it or even used it for work or study: ChatGPT. The tool is becoming more and more prominent. But what exactly is this super chatbot? And more importantly, what can it not do? Maarten Smeets and Steven Grond from AMIS | Conclusion found out for you.

February 1st, 2023 | Blog | By: Conclusion

ChatGPT in a nutshell

Technically, GPT is a generative AI model and ChatGPT is the chat user interface layered on top of it. There are various generative models that are trained on large datasets and produce new data such as text, images or audio. In this regard, (Chat)GPT is not unique: DALL-E and Stable Diffusion are well-known examples for image creation.

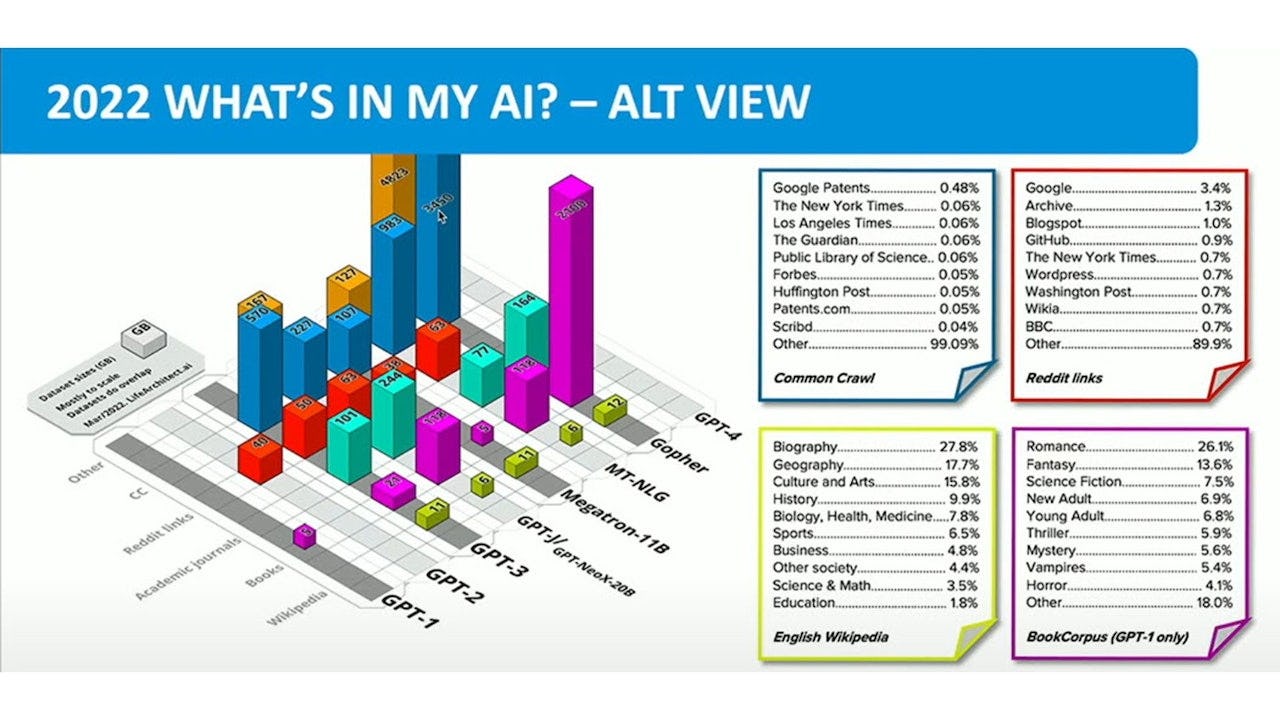

Here we are talking specifically about OpenAI's GPT. OpenAI was founded in late 2015 by Elon Musk, among others, and originally focused on research. Musk stepped down from the board in early 2018 due to differences of opinion. GPT-1 became available in 2018 and was trained on books. GPT-2 followed in 2019, with Reddit links added to the training data, and in 2020 GPT-3 was trained on Wikipedia, books, academic journals, Reddit links, and Common Crawl. Common Crawl is a kind of public database with petabytes (thousands of terabytes) of data collected over 12 years of internet crawling. The GPT variant we are now using is GPT-3.5, which dates from early 2022. ChatGPT itself was launched in late 2022.

ChatGPT was trained on 10,000 graphics cards (NVIDIA Tesla A100 GPUs) fed with a huge amount of input from the internet. After a training run of roughly a month (a rough estimate), this produced the GPT-3.5 model, which is a snapshot of that data at one moment in time. For comparison, training GPT-3.5 on a single graphics card would have taken approximately 355 years!
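As a rough sanity check on these numbers, the sketch below divides the single-card figure by the number of cards. It assumes perfect linear scaling across GPUs, which real training clusters do not reach, so the result is only an idealised lower bound and not a description of how OpenAI actually trained the model.

```python
# Back-of-the-envelope check of the training-time figures quoted in the text.
# Assumption: the work divides evenly across all GPUs (ideal linear scaling).

SINGLE_GPU_YEARS = 355   # single-card estimate quoted in the text
GPU_COUNT = 10_000       # number of graphics cards quoted in the text
DAYS_PER_YEAR = 365


def ideal_training_days(single_gpu_years, gpu_count):
    """Days needed if the work parallelised perfectly over gpu_count GPUs."""
    return single_gpu_years * DAYS_PER_YEAR / gpu_count


days = ideal_training_days(SINGLE_GPU_YEARS, GPU_COUNT)
print(f"Idealised training time: {days:.1f} days")
```

Under that assumption the run would take about 13 days. That is the same order of magnitude as the rough estimate of a month, and the gap is what you would expect from communication overhead and other inefficiencies.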

Generative Pretrained Transformer (GPT)

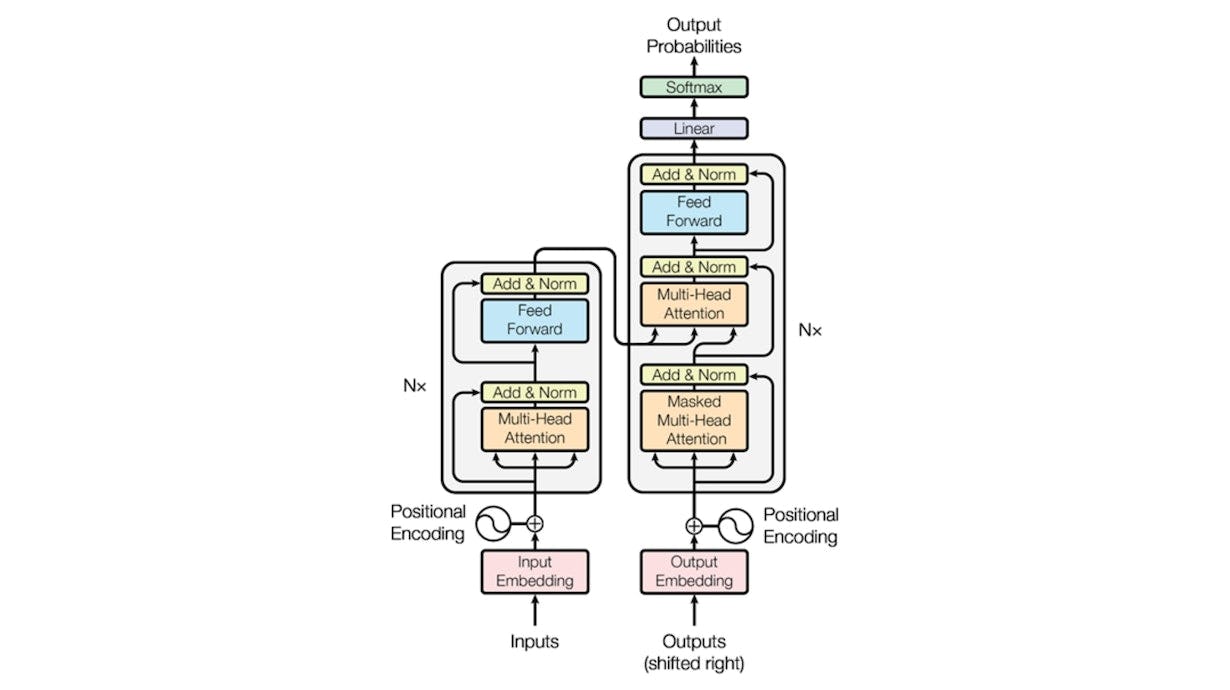

GPT is a deep learning model based on the transformer architecture, which is essentially the successor to the Recurrent Neural Network (RNN). Unlike RNNs, GPT can be trained in parallel and deliver parallel outputs thanks to three key elements: positional encoding, which lets the model learn the order of words by encoding their positions numerically; attention, which lets the model weigh all inputs and predict parts of the input in parallel; and self-attention, which enables the model to understand context in the input, such as the presence of words like 'no' or 'not', and account for it in its output.
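To make positional encoding and self-attention a little more concrete, here is a minimal sketch in plain Python. It rests on several assumptions that are not taken from this article: it uses the sinusoidal position scheme from the original transformer design (GPT models may encode positions differently), it skips the learned query, key and value projections, and it omits the masking that stops a word from looking at later words. The toy embeddings are made-up numbers.

```python
import math


def positional_encoding(position, d_model):
    """Sinusoidal encoding of a token position (one common scheme, assumed here)."""
    encoding = []
    for i in range(d_model):
        angle = position / (10000 ** (2 * (i // 2) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Every output vector is a weighted mix of all input vectors, so each
    position can take the rest of the sentence into account.
    """
    d = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in vectors
        ]
        weights = softmax(scores)
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)]
        )
    return outputs


# Hypothetical 4-dimensional embeddings for a three-word input.
embeddings = [
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]

# Add position information to each word, then let the words attend to each other.
inputs = [
    [e + p for e, p in zip(embedding, positional_encoding(pos, 4))]
    for pos, embedding in enumerate(embeddings)
]
contextual = self_attention(inputs)
```

Note that no step in `self_attention` depends on the result for another position, which is why this kind of model can process all words at once instead of one after the other like an RNN.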

What can ChatGPT do?

A lot! It's a search engine on steroids. You can have it generate instructions, manuals and step-by-step plans. It can generate, translate and rephrase texts. It can write code, explain what a piece of code does, generate test data, make music (at least in text-based note notation), and validate advice from experts. ChatGPT also keeps track of the context of the entire conversation, allowing you to follow up and build on previous answers. It can even help you with relationship advice (at your own risk). However, there is a caveat: not everything that ChatGPT spits out is correct. More about that later.

Maarten and Steven also looked into copyright. Who actually owns the AI's output? It turns out that you do, since an AI cannot hold copyright. And with the creativity you put into the question, the unique output, and what you then do with the output, you make it completely your own. Keep in mind: if GPT produces content that is traceable to a specific source, under US law the original author has the copyright. So don't count yourself rich too soon!

What can ChatGPT not do?

This all-rounder has its weaknesses, even in the things it can do well. Fun fact: You can ask ChatGPT what it is bad at. For example, it does not always understand the correct context or sarcasm and struggles with subjectivity, common sense, and abstraction. But there are other drawbacks:

- ChatGPT is closed source, so it cannot be installed locally. You give all your input to OpenAI and indirectly to Microsoft, as ChatGPT runs on Azure.
- It cannot interact with the physical world, only simulate it.
- Its training data runs only until September 2021, so it knows nothing about later events. For example, it doesn't know about the war in Ukraine.
- You cannot validate the sources it uses in its answers to your questions.
- ChatGPT is not sustainable: training and using models like this comes with a substantial price tag and considerable CO2 emissions. But that's also the case with a simple Google search.
- It raises ethical questions. Should everything be allowed with it? Should we warn students about the use of ChatGPT, or should we help them use it as well as possible? This all remains to be seen.

The future of ChatGPT

We expect GPT-4 to arrive by the end of 2023. There are many rumors about the update, but very little is known for sure. In addition, OpenAI is working on video generation. Microsoft is working on integrating ChatGPT into Microsoft Bing and on voice generation (VALL-E). Artificial General Intelligence (AGI) - AI that can learn human cognitive tasks - is still waiting in the wings.

More information about ChatGPT?

Curious about what ChatGPT can do for your work? Feel free to contact us.